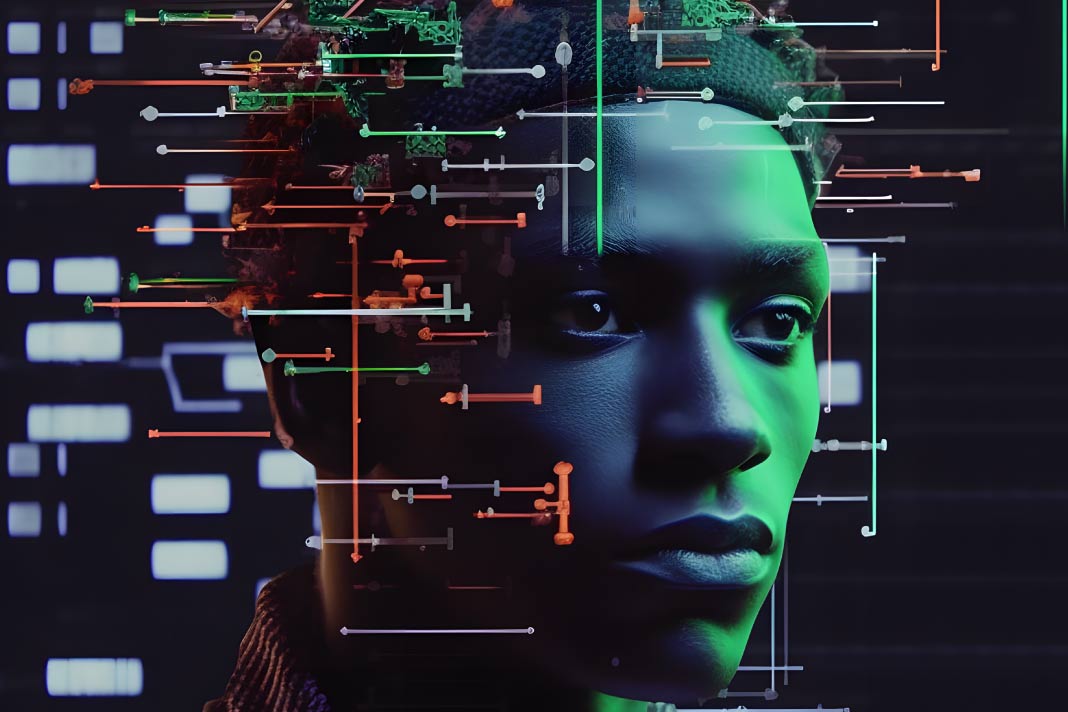

AI apocalypse averted? OpenAI unveils a safety plan for its powerful models. But can they be trusted?

OpenAI, the company behind the ultra-popular chatbot ChatGPT, has outlined strategies to avert worst-case outcomes that could result from the potent artificial intelligence technology it’s developing. The company released a 27-page “Preparedness Framework” document this week.

The document describes how OpenAI plans to track, assess, and safeguard against “catastrophic risks” from state-of-the-art AI models. These risks could include the use of AI models for mass cyber disruption or even the creation of biological, chemical, or nuclear weapons.

Under the new framework, the decision-making power to release new AI models rests with OpenAI’s company leadership. However, the board of directors holds the ultimate authority and the “right to reverse decisions” OpenAI leadership makes.

OpenAI also says its AI models would have to pass numerous safety checks before matters ever reached the point where the board would need to veto a potentially risky deployment. A specialized “preparedness” team will lead efforts to monitor and mitigate potential dangers from advanced AI models within OpenAI.

MIT professor Aleksander Madry, currently on leave from the institute, will lead the startup’s preparedness team. He will oversee a team of researchers responsible for evaluating and closely monitoring potential risks and compiling these risks into scorecards. The scorecards will categorize risks as “low,” “medium,” “high,” or “critical.”

According to the preparedness framework, “only models with a post-mitigation score of ‘medium’ or below can be deployed,” and only models with a “post-mitigation score of ‘high’ or below can be developed further.”
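For readers who think in code, the two thresholds quoted above can be written as a pair of simple checks. This is only an illustrative sketch in Python: the names `RiskLevel`, `can_deploy`, and `can_develop_further` are hypothetical and do not come from OpenAI's document, and the sketch assumes a model has already been given a single post-mitigation score.

```python
from enum import IntEnum


class RiskLevel(IntEnum):
    """The four scorecard levels named in the framework, ordered by severity."""
    LOW = 0
    MEDIUM = 1
    HIGH = 2
    CRITICAL = 3


def can_deploy(post_mitigation: RiskLevel) -> bool:
    # Hypothetical helper: deployment requires a score of "medium" or below.
    return post_mitigation <= RiskLevel.MEDIUM


def can_develop_further(post_mitigation: RiskLevel) -> bool:
    # Hypothetical helper: further development requires "high" or below.
    return post_mitigation <= RiskLevel.HIGH


if __name__ == "__main__":
    # Print what each score would allow under the two quoted rules.
    for level in RiskLevel:
        print(level.name, can_deploy(level), can_develop_further(level))
```

Under these rules, a model scored “high” could still be worked on but not released, while a model scored “critical” could be neither released nor developed further.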

It’s important to note that the document is currently in “beta,” meaning it’s a work in progress. OpenAI plans to update it regularly based on the feedback received.

The framework brings renewed focus to the unique governance structure at OpenAI, a leading artificial intelligence startup that recently underwent significant board changes following a corporate dispute. The conflict resulted in CEO Sam Altman’s dismissal and reinstatement within five days.

This closely watched corporate drama raised concerns about Altman’s authority over the company he co-founded and about the perceived limits on the board’s power over him and his leadership team.

The existing board, which OpenAI labels as “initial” and which is currently being expanded, consists of three affluent white men. They face the significant responsibility of ensuring OpenAI’s most advanced technology effectively fulfills its mission to benefit all of humanity.

The lack of diversity on OpenAI’s interim board has received widespread criticism. Critics have also expressed concerns about relying solely on businesses to self-regulate, stressing the importance of government intervention to ensure the safe development and deployment of AI technologies.

OpenAI’s latest safety measures come after a year of debate over the potential threat of an AI apocalypse.

Earlier this year, hundreds of distinguished AI scientists and researchers, including OpenAI’s Altman and Google DeepMind CEO Demis Hassabis, signed a one-sentence open letter stating that mitigating the “risk of extinction from AI” should be a global priority, alongside other dangers like “pandemics and nuclear war.”

Although the statement stirred public apprehension, some industry observers have accused companies of using distant catastrophic scenarios to divert attention from present challenges associated with AI tools.